Running an LLM locally on Pop!_OS with ROCm support

Running ROCm on Pop!

This has gotten sooo much easier than when I tried to set this up 2 years ago. ROCm has come a long way, and the support and tooling have advanced as well. Now you don’t have to jump through tons of hoops to get AI libraries and software to work with ROCm. The last time I tried this, I needed to add Ubuntu repos, edit my /etc/os-release file to pretend I was using Ubuntu, and do a rain-dance to get my Radeon 6900 XT in a usable state.

Then, once that was done, you’d realize that none of the software and libraries incorporated ROCm support and get really sad that you sold your Nvidia GFX card because “radeon works better on linux”. Basically, 2 years ago, getting ROCm set up was a pain in the ass and your only reward was buggy or nonfunctioning software, since the AI/ML world is built on CUDA. Now it’s much better: ROCm’s HIP layer makes it practical to port CUDA code, and most of the major libraries have incorporated ROCm support. Things like PyTorch now support AMD ROCm natively.

Getting ROCm installed on Pop!_OS has been simplified to copying and pasting some commands:

wget https://repo.radeon.com/rocm/rocm.gpg.key -O - | gpg --dearmor | sudo tee /etc/apt/keyrings/rocm.gpg > /dev/null

echo "deb [arch=amd64 signed-by=/etc/apt/keyrings/rocm.gpg] https://repo.radeon.com/rocm/apt/6.1 jammy main" | sudo tee --append /etc/apt/sources.list.d/rocm.list

echo -e 'Package: *\nPin: release o=repo.radeon.com\nPin-Priority: 600' | sudo tee /etc/apt/preferences.d/rocm-pin-600

Then update and install:

sudo apt update && sudo apt install rocm

Add your user to the ‘render’ group so your account has permission to use the GPU (the change takes effect the next time you log in):

sudo usermod -a -G render $USER
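A group added with usermod only applies to sessions started after the change, so it can look like the command did nothing. Here is a small sketch for checking it. The `has_group` helper is my own name for illustration, not part of any tool:

```shell
# Succeeds if the space-separated group list in $1 contains the group named in $2.
# (has_group is a hypothetical helper, not something ROCm or Pop!_OS provides.)
has_group() {
    case " $1 " in
        *" $2 "*) return 0 ;;
        *) return 1 ;;
    esac
}

# Usage:
#   has_group "$(id -nG "$USER")" render   # what the group database says
#   has_group "$(id -nG)" render           # what your current session actually has
```

If the first check passes and the second fails, the usermod worked and you just need to log out and back in.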

You can confirm it all works with:

rocminfo
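rocminfo prints a lot of text, so it helps to pull out just the GPU architecture names. A minimal sketch, assuming the output mentions your card with a `gfx` identifier (a 6900 XT should show up as gfx1030); the function name is mine:

```shell
# Print the unique gfx architecture names found in rocminfo output.
# Reads the text on stdin, so it can be tried on saved output too.
list_gfx_targets() {
    grep -oE 'gfx[0-9a-f]+' | sort -u
}

# Usage:
#   rocminfo | list_gfx_targets
```

If this prints nothing, ROCm probably isn't seeing the GPU, and the group membership and driver install are the first things I'd recheck.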

Installing the actual language models:

Since we have ROCm installed and working properly, let’s install a local LLM. I decided to use Ollama, an open source tool for downloading and running models locally, together with Llama 3, which is Meta’s openly available model. The Ollama site actually has a script that helps get it all up-and-running quickly. They even have a Linux version.

I hate that this has become a common practice in the *nix community, because it’s such a huge security risk, but they offer a script you can run if you copy and paste this command:

curl -fsSL https://ollama.com/install.sh | sh

Only do this if you have reviewed the script and/or can REALLY trust the source. You are downloading a script from the internet and piping it into your shell to run.
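One way to make the review step concrete is to download the script to a file first, so the thing you read is the same thing you run. A rough sketch; `fetch_for_review` and the local filename are just names I picked:

```shell
# Download a script to a local file instead of piping it straight into a shell,
# then print its SHA-256 so you can tell if it changes between downloads.
# $1 = URL, $2 = destination file
fetch_for_review() {
    curl -fsSL "$1" -o "$2" || return 1
    sha256sum "$2"
}

# Usage:
#   fetch_for_review https://ollama.com/install.sh ollama-install.sh
#   less ollama-install.sh    # actually read it
#   sh ollama-install.sh      # run the copy you just reviewed
```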

There are manual steps here, though, that you can follow if you don’t want to use the script: https://github.com/ollama/ollama/blob/main/docs/linux.md

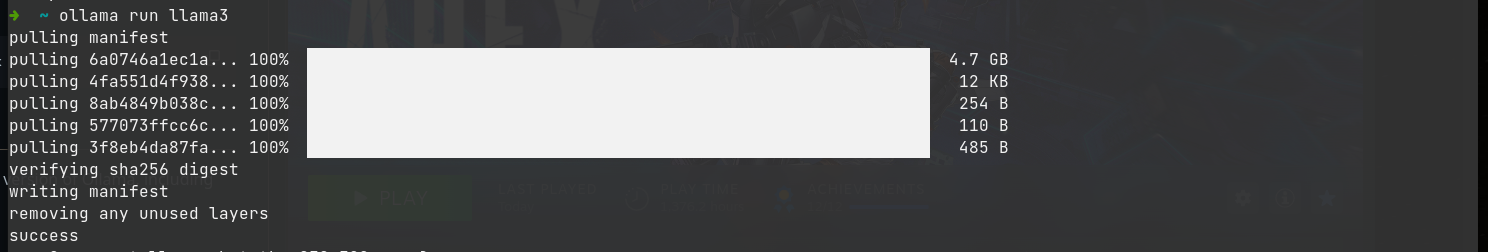

I ran the script (after I reviewed it!). If you’ve done everything properly, you should be able to type:

ollama run llama3

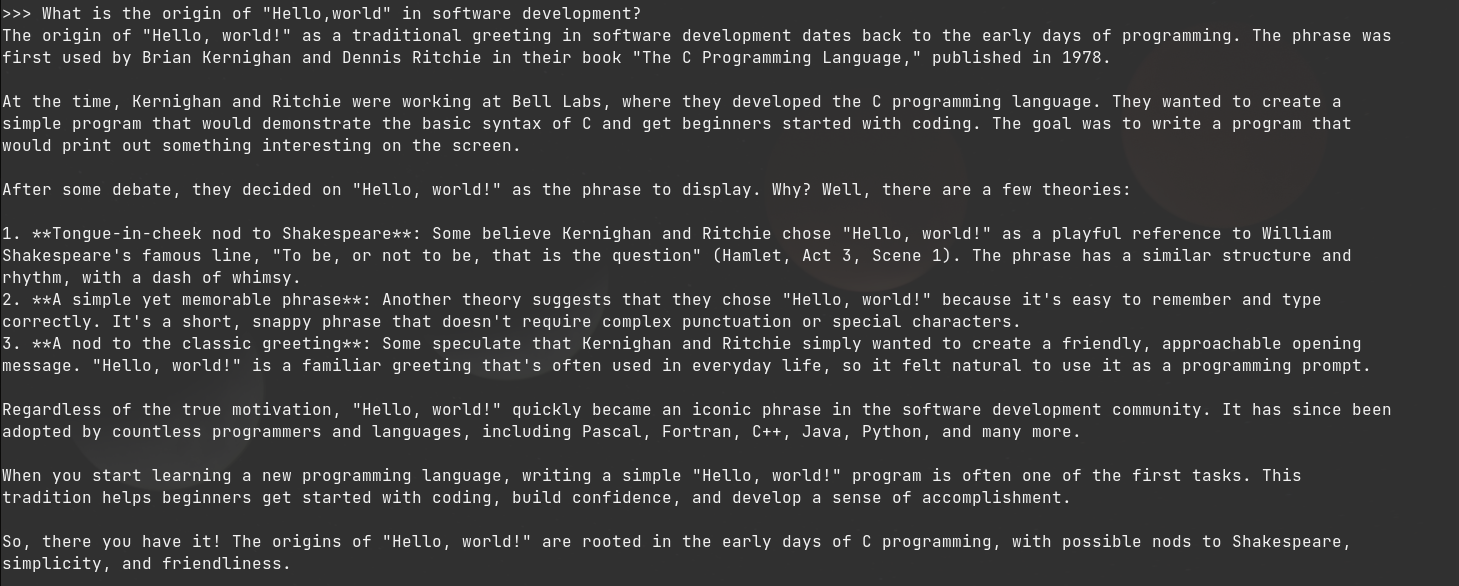

The first time you run this it will download llama3, which can take a bit, and then it will drop you into a prompt where you can talk to it like you would ChatGPT or any other LLM.
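Besides the interactive prompt, Ollama also runs a local HTTP API, by default on port 11434, which is handy for scripting. Below is a minimal sketch of calling its /api/generate endpoint. The helper names are mine, and the escaping is deliberately simple (backslashes and double quotes only), so treat it as a starting point:

```shell
# Build the JSON body for Ollama's /api/generate endpoint.
# $1 = model name, $2 = prompt. Only backslashes and double quotes are escaped;
# multi-line prompts are not handled by this sketch.
ollama_payload() {
    printf '{"model":"%s","prompt":"%s","stream":false}' \
        "$1" "$(printf '%s' "$2" | sed 's/\\/\\\\/g; s/"/\\"/g')"
}

# Send one prompt to the local Ollama server and print the raw JSON reply.
ask_ollama() {
    curl -fsS http://localhost:11434/api/generate -d "$(ollama_payload "$1" "$2")"
}

# Usage:
#   ask_ollama llama3 "Why is the sky blue?"
```

The answer comes back in the `response` field of the JSON. On recent Ollama versions, `ollama ps` should also list loaded models and whether they are sitting on the GPU or the CPU, which is a quick way to see if ROCm is actually being used.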

And that’s that! A working LLM on Pop!_OS using ROCm on your Radeon GFX card! The next step is adding a more user-friendly, ChatGPT-like interface for it. This way you can discover and switch models easily, save your history, and easily set system prompts and your own guardrails, all locally and privately. That will be in the next post when I have some time to tinker with it.