Using open-webui as a local ChatGPT replacement

Using open-webui

I set up a local LLM using ROCm on my desktop in my last post. So I have the LLM running locally, but it's pretty clunky, and as a paying user of ChatGPT, I want that cleaner UI/UX. Enter open-webui. This is exactly what I was looking for as a front end for the ollama server I set up.

I decided to go with the Docker setup.

I recently refreshed my Pop!_OS install, so Docker was no longer on my machine. Setting up Docker on Pop!_OS is straightforward, since the Ubuntu instructions apply as-is:

Install Docker

# Add Docker's official GPG key:
sudo apt-get update
sudo apt-get install ca-certificates curl
sudo install -m 0755 -d /etc/apt/keyrings
sudo curl -fsSL https://download.docker.com/linux/ubuntu/gpg -o /etc/apt/keyrings/docker.asc
sudo chmod a+r /etc/apt/keyrings/docker.asc

# Add the repository to Apt sources:
echo \
  "deb [arch=$(dpkg --print-architecture) signed-by=/etc/apt/keyrings/docker.asc] https://download.docker.com/linux/ubuntu \
  $(. /etc/os-release && echo "$VERSION_CODENAME") stable" | \
  sudo tee /etc/apt/sources.list.d/docker.list > /dev/null
sudo apt-get update

sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

You can check that Docker is installed by running docker --version. I prefer Docker Compose when I run containers.
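A slightly fuller sanity check than docker --version might look like the sketch below. The check_cmd helper is a name I made up for illustration (it is not part of Docker), and the commented-out hello-world run assumes the machine can reach Docker Hub.

```shell
#!/usr/bin/env bash
# check_cmd is a hypothetical helper (not part of Docker): it reports
# whether a command is available on PATH.
check_cmd() {
  if command -v "$1" >/dev/null 2>&1; then
    echo "found: $1"
  else
    echo "missing: $1"
    return 1
  fi
}

if check_cmd docker; then
  docker --version                                   # Docker CLI version
  docker compose version || echo "Compose plugin not found"
  # Optional end-to-end test; pulls a tiny image, so it needs network access:
  # sudo docker run --rm hello-world
fi
```

If the Compose line prints a version, the docker-compose-plugin package from the install step is working and the compose file in the next section can be used directly.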

Docker Compose Configuration

This is what my compose file looks like:

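version: '3.8' # Compose file format version (current Docker Compose releases ignore this key)
services:
  open-webui:
    image: ghcr.io/open-webui/open-webui:main
    container_name: open-webui
    environment:
      # Where open-webui should look for the ollama server
      - OLLAMA_BASE_URL=http://127.0.0.1:11434
    volumes:
      # Host directory that keeps accounts and chat history across restarts
      - /location/on/host/tosavedata:/app/backend/data
    # Share the host's network so 127.0.0.1 reaches ollama running on the host
    network_mode: host
    restart: always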
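To actually start the container from that file, something like the following sketch should work. It assumes the file was saved under one of Compose's default names in the current directory, and that Docker still needs sudo because the user was not added to the docker group. find_compose_file is a hypothetical helper written for this example.

```shell
#!/usr/bin/env bash
# find_compose_file is a hypothetical helper: it prints the first default
# Compose file name found in the given directory (default: current directory).
find_compose_file() {
  local dir="${1:-.}" name
  for name in compose.yaml compose.yml docker-compose.yaml docker-compose.yml; do
    if [ -f "$dir/$name" ]; then
      echo "$dir/$name"
      return 0
    fi
  done
  return 1
}

if file="$(find_compose_file .)" && command -v docker >/dev/null 2>&1; then
  sudo docker compose -f "$file" up -d                      # create and start in the background
  sudo docker compose -f "$file" ps                         # confirm the container is running
  sudo docker compose -f "$file" logs --tail 20 open-webui  # last lines of startup output
else
  echo "no compose file in this directory, or docker is not installed"
fi
```

The first start pulls the image, so it can take a few minutes before the container shows up as running.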

Once the container is running, go to localhost:8080 and you will see the web front end.
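If the page does not load, it helps to check both ends from the terminal. This is a rough sketch: probe is a made-up helper, the ports are the ones used in this post (11434 for ollama, 8080 for open-webui), and /api/tags is ollama's endpoint for listing installed models.

```shell
#!/usr/bin/env bash
# probe is a hypothetical helper: prints "up" if the URL answers with a
# successful HTTP status within 5 seconds, "down" otherwise.
probe() {
  if curl -fsS -o /dev/null --max-time 5 "$1" 2>/dev/null; then
    echo "up"
  else
    echo "down"
  fi
}

echo "ollama:     $(probe http://127.0.0.1:11434/api/tags)"
echo "open-webui: $(probe http://localhost:8080)"
```

If ollama reports down, the front end will still load but the model list will be empty, so that is the first thing to fix.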

The Interface

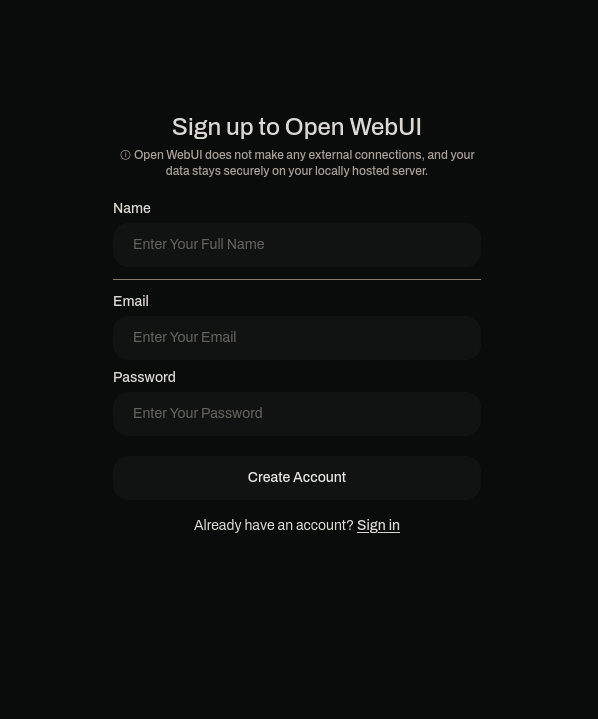

The service makes you create an account. It's all local; nothing is sent anywhere. There is a flag you can set when you spin up your Docker container to skip the login (WEBUI_AUTH=False, going by the Open WebUI docs), but I decided to leave it this way since I may eventually set this up so my wife can use it too.

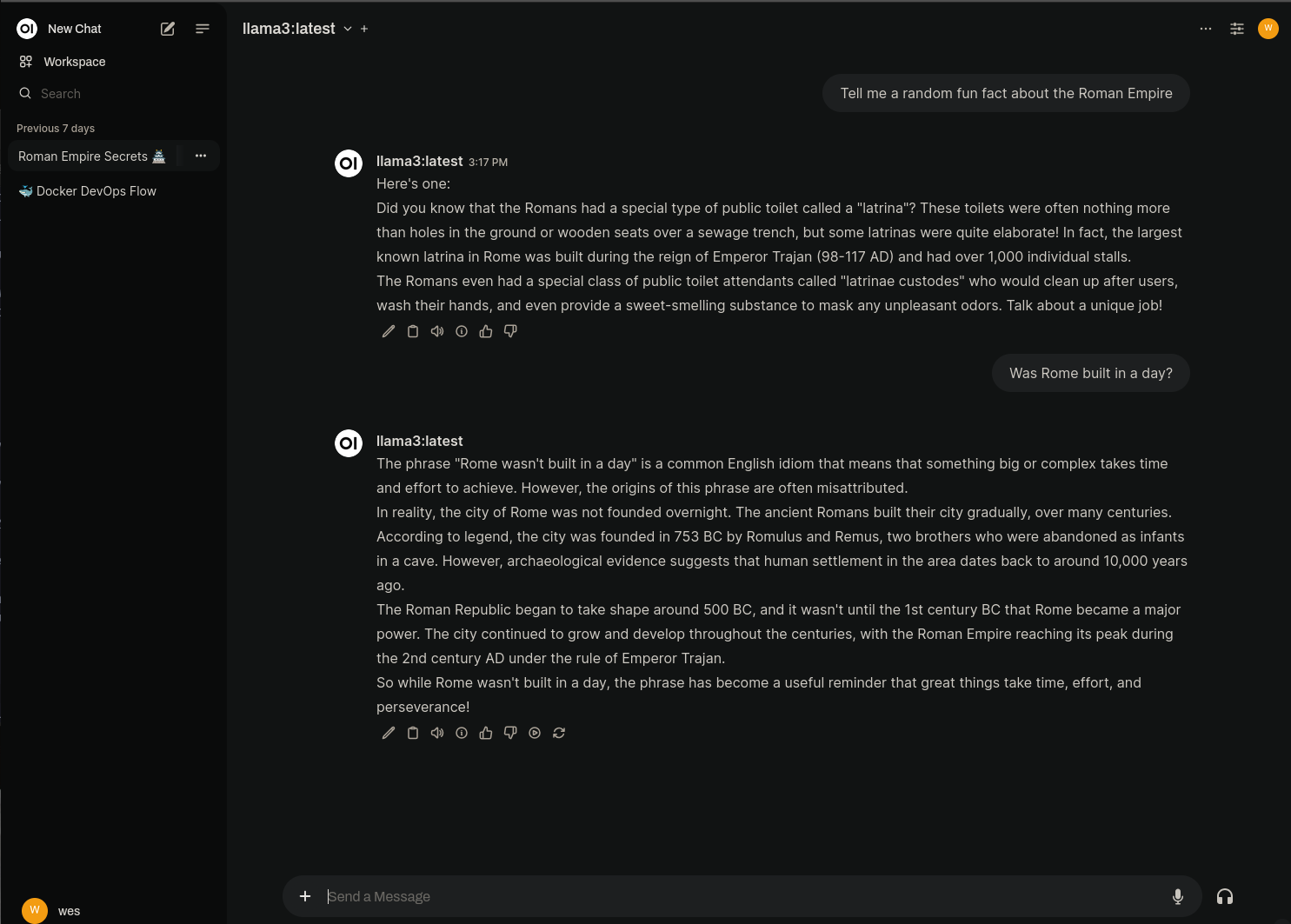

The interface is very familiar if you’ve used ChatGPT before. Up at the top you can select the model to use. It's worth setting a default. It doesn’t make you set one, but it gets pretty annoying to open a new chat and have to manually pick a model each time. In my opinion, they should have it select a default on first use, but it's not a huge deal at the end of the day.

And that’s really it. Now you have a ChatGPT-like UX that lets you quickly switch between models, remembers your chats if you need to go back and reference them, and runs entirely locally.

I plan on running LM Studio as well. It's a little more conducive to ‘playing’ with the models and prompts, and it gives easier access to a lot of the uncensored models that don’t have the nanny protections built in. I also plan on setting up RAG (Retrieval-Augmented Generation) with some of my own data to see how useful I can make all of this.